
EAIDB 1H2024 Preview: AI Security

July 27th, 2024

In anticipation of our next semiannual report, we're releasing some of our insights from the AI Security space! Stay tuned for the full report, which is set to be released at the end of August.

Industry Profile

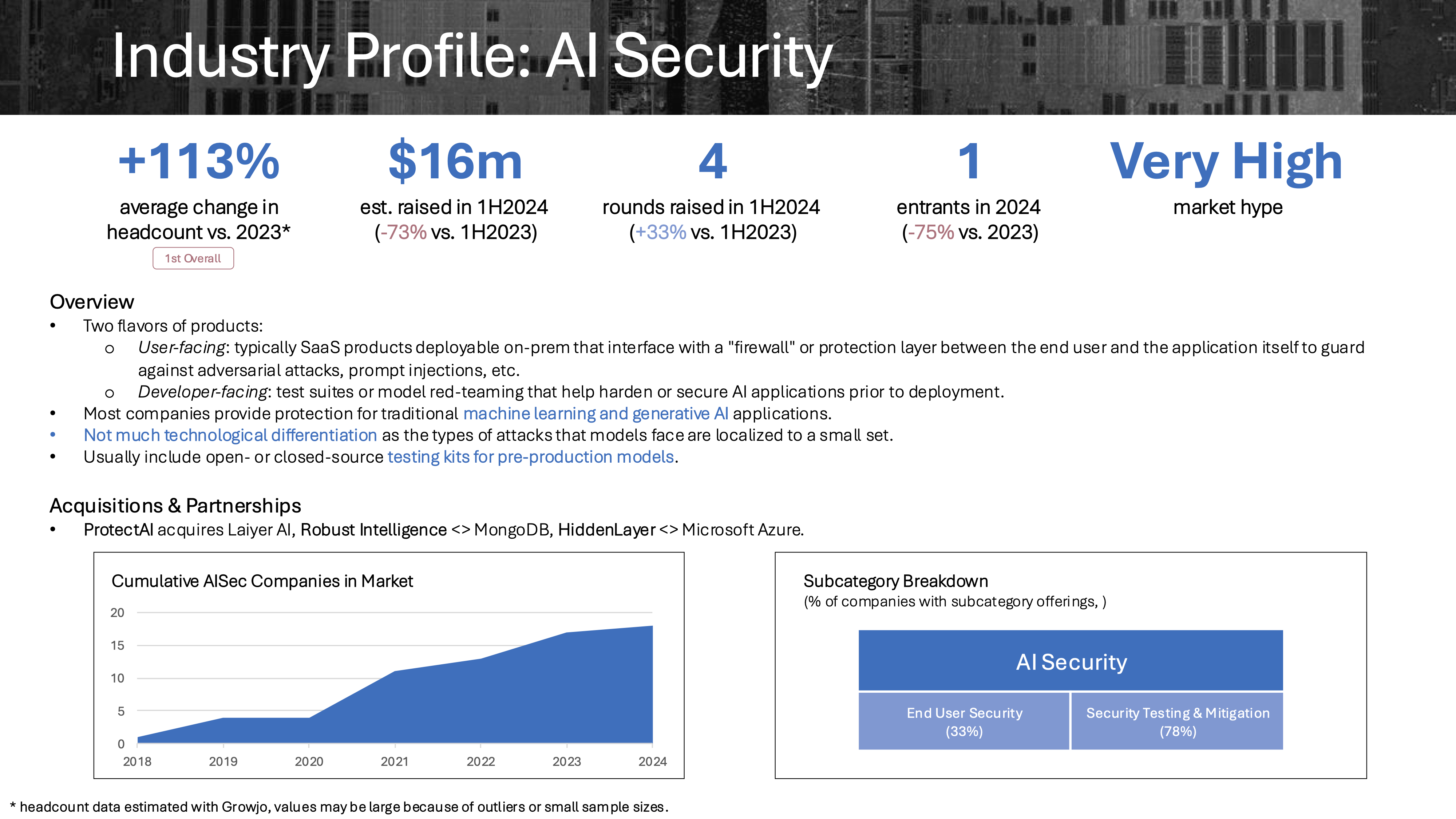

The AI Security space consists of solutions that protect models (both traditional machine learning and generative AI) from malicious attackers. Its focus is not AI-assisted cybersecurity but security for AI. Despite a tough funding market in 2023, this category attracted more funding than any other, including blockbuster Series A rounds such as Protect AI's $35m round (led by Evolution Equity) and HiddenLayer's $50m round (led by M12).

Common subcategories include:

Security Testing & Mitigation: platforms that offer comprehensive pre-production security test suites (e.g., for prompt injection, membership inference attacks, and penetration testing) and/or production-grade monitoring and threat mitigation. Examples include Protect AI and HiddenLayer.

End User Security: platforms or plug-ins that prevent sensitive data from leaking through model outputs (e.g., redacting PII found in an LLM's response) or that guard against a company's employees misusing external AI tools. Examples include Portal26 and Lakera.

Want more? Stay tuned for the full EAIDB report, which will offer much more insight, including funding trends, barriers and opportunities, and in-depth profiles of the "winners" in each space.